Phandroid

Google details the crazy 3D mapping tech behind Google Camera’s new Lens Blur effect

Lens blur effects seem to be the latest trend in smartphone cameras. In an attempt to simulate larger DSLR cameras with their big lenses and wide apertures, smartphones like the HTC One M8 and Samsung Galaxy S5 are now using a mixture of software and hardware to create a shallow depth of field and the soft background blur known as “bokeh.”

For the One M8, HTC achieves a shallow depth of field with its “Duo Camera” system: two cameras that work like a pair of human eyes, helping the phone distinguish what’s near and far and letting users go back into a photo and refocus as needed. As we mentioned in our review, the feature is hit or miss and rarely produces results like those from a larger SLR.
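The “work like the human eye” idea is ordinary stereo vision: the same object lands at slightly different horizontal positions in the two cameras, and that shift (the disparity) shrinks as the object gets farther away. HTC doesn’t publish its algorithm, so the sketch below is a generic textbook version, not the Duo Camera’s actual code. It matches a small patch along one scanline by sum of absolute differences, then converts disparity to depth with Z = f · B / d. The function names, the 1000-pixel focal length and the 2 cm baseline are hypothetical values chosen for illustration.

```python
# Hypothetical sketch: textbook stereo block matching on one scanline, then
# depth from disparity (Z = f * B / d). Not HTC's actual Duo Camera code.

def disparity_1d(left, right, x, window=2, max_disp=8):
    """Return the shift d (in pixels) that best matches the patch around
    left[x] to the right scanline, scoring candidates by sum of absolute
    differences (SAD). Shifts that push the patch out of bounds are skipped."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        cost = 0.0
        for k in range(-window, window + 1):
            xl, xr = x + k, x + k - d
            if not (0 <= xl < len(left) and 0 <= xr < len(right)):
                cost = float("inf")
                break
            cost += abs(left[xl] - right[xr])
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Pinhole stereo: depth shrinks as disparity grows. Zero disparity
    means the point is effectively at infinity."""
    if disparity_px <= 0:
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Toy scanlines: the bright feature in `left` appears 3 px further left in `right`.
left = [0, 0, 0, 0, 0, 9, 5, 7, 0, 0, 0, 0, 0, 0]
right = left[3:] + [0, 0, 0]
d = disparity_1d(left, right, x=6)
print(d, depth_from_disparity(focal_px=1000, baseline_m=0.02, disparity_px=d))
```

With these toy scanlines the matcher reports a 3-pixel disparity. Real pipelines match 2D patches and refine to sub-pixel precision, and flat, low-texture regions give ambiguous matches for this kind of search.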

Samsung takes a slightly different approach on the Galaxy S5 with a feature it calls “Selective Focus.” The GS5 camera quickly focuses close, then far, so the user can go back, refocus where they’d like, and add extra bokeh for a professional look. Again, just as we saw with HTC, the results are a mixed bag.
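Samsung doesn’t say how Selective Focus decides which parts of the scene belong to the near or far shot, so here is one plausible, generic approach (an assumption, not Samsung’s method): treat each focus setting as a separate frame, score every pixel by local contrast with a discrete Laplacian, and keep whichever frame is sharpest there. Tapping a point then shows the frame that is sharpest at that point. The function names and tiny test images are hypothetical.

```python
# Hypothetical sketch of a focus sweep: for each pixel, pick the frame in
# which it looks sharpest, using the absolute discrete Laplacian as a
# contrast measure. Not Samsung's actual Selective Focus implementation.

def sharpness(img, y, x):
    """Absolute 4-neighbour Laplacian at (y, x), clamping at the borders."""
    h, w = len(img), len(img[0])

    def px(yy, xx):
        return img[min(max(yy, 0), h - 1)][min(max(xx, 0), w - 1)]

    return abs(4 * px(y, x) - px(y - 1, x) - px(y + 1, x)
               - px(y, x - 1) - px(y, x + 1))


def sharpest_frame(frames, y, x):
    """Index of the frame with the highest contrast at pixel (y, x)."""
    return max(range(len(frames)), key=lambda i: sharpness(frames[i], y, x))


def focus_map(frames):
    """Grid holding, per pixel, the index of the sharpest frame."""
    h, w = len(frames[0]), len(frames[0][0])
    return [[sharpest_frame(frames, y, x) for x in range(w)] for y in range(h)]


def refocus(frames, tap_y, tap_x):
    """Show the frame that is sharpest at the point the user tapped."""
    return frames[sharpest_frame(frames, tap_y, tap_x)]


# Toy 3x4 grayscale frames: "near" has detail on the left, "far" on the right.
near = [[0, 9, 0, 5], [9, 0, 9, 5], [0, 9, 0, 5]]
far = [[4, 4, 0, 9], [4, 4, 9, 0], [4, 4, 0, 9]]
print(focus_map([near, far]))
```

A real implementation would work on full-resolution images, smooth the per-pixel choice so neighbouring pixels don’t flicker between frames, and blend frames rather than swap them wholesale.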

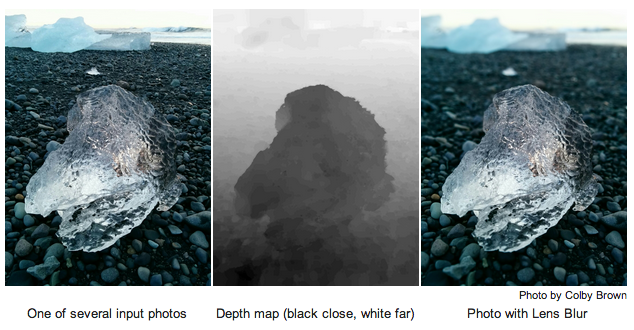

In the newly released Google Camera app (now available on Google Play), Google takes its own stab at shallow depth of field (DoF), approaching it a bit differently from HTC and Samsung. Once again, it’s a combination of hardware and software working together, in a feature Google calls Lens Blur. To take a Lens Blur picture, the user simply sweeps the camera upward from the subject, and that motion somehow tells the phone what’s near and far. To explain exactly what the heck is going on behind the scenes, Google laid it all out in a blog post.

Apparently, Lens Blur uses much the same 3D mapping technology Google already uses for Google Maps Photo Tour and Google Earth, only with the algorithm shrunk down to run on your smartphone. As you sweep the camera upward, your phone takes a whole bunch of pictures, and Lens Blur uses an algorithm to turn that series of frames into a 3D model. Lens Blur then estimates depth, shown in the black-and-white image above, by triangulating the position of the subject relative to the background. Pretty neat.
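Google’s own post covers the full pipeline; the sketch below only illustrates the two ideas in the paragraph above, under simplifying assumptions: a pinhole camera that translates between two frames of the sweep without rotating, with known focal length and camera positions. Triangulation here takes the midpoint of the closest approach between two viewing rays, and the recovered depth is turned into a blur size with the textbook thin-lens circle-of-confusion formula. Google’s actual depth estimation and rendering may differ; every function name, the 50 mm virtual lens at f/2, and the 10 cm camera offset are hypothetical.

```python
# Hypothetical sketch: two-view triangulation plus a thin-lens blur estimate.
# Assumes a pinhole camera that only translates between frames (no rotation),
# with known focal length and camera positions. Not Google's actual pipeline.

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def pixel_to_ray(u, v, focal_px, cx=0.0, cy=0.0):
    """Direction of the viewing ray through pixel (u, v) in camera coordinates."""
    return ((u - cx) / focal_px, (v - cy) / focal_px, 1.0)


def triangulate_midpoint(p1, d1, p2, d2):
    """Midpoint of the shortest segment between rays p1 + t*d1 and p2 + s*d2."""
    w0 = sub(p1, p2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; no parallax to triangulate")
    t = (b * e - c * d) / denom
    s = (a * e - b * d) / denom
    q1 = tuple(pi + t * di for pi, di in zip(p1, d1))
    q2 = tuple(pi + s * di for pi, di in zip(p2, d2))
    return tuple((x + y) / 2 for x, y in zip(q1, q2))


def blur_diameter(depth_m, focus_m, focal_m=0.05, f_number=2.0):
    """Thin-lens circle of confusion (on the sensor, in metres) for a point
    at depth_m when a virtual lens is focused at focus_m."""
    aperture = focal_m / f_number
    return aperture * abs(depth_m - focus_m) / depth_m * focal_m / (focus_m - focal_m)


# Frame 2 is taken 10 cm from frame 1 along the camera's y-axis.
f = 1000.0
p1, p2 = (0.0, 0.0, 0.0), (0.0, 0.1, 0.0)
point = triangulate_midpoint(p1, pixel_to_ray(250, 100, f),
                             p2, pixel_to_ray(250, 50, f))
print(point)  # approximately (0.5, 0.2, 2.0)
print(blur_diameter(point[2], focus_m=1.0))
```

Because the camera moved along y, the point’s two image positions differ only in v; that 50-pixel shift is the parallax that makes its depth recoverable. A real sweep yields many frames, so a full pipeline would combine many such measurements rather than rely on just two, and would then blur each pixel by an amount that grows with its distance from the chosen focal plane.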

It’s all pretty damn technical, and the amount of work that goes into making something as seemingly “simple” as Lens Blur work on your mobile device is mind-blowing (even if it’s essentially just a by-product of a much bigger technology). You can read more about the technical details behind Lens Blur at the link below.
